Rate Limiting: Concepts, Algorithms, and Real-World Use Cases

A deep-dive into rate limiting and how to implement it in a system design interview. Including real-world use cases and examples!

Once again, I'm back with another recap, this time of what I read about rate limiters in the ‘System Design Interview’ book. At the end of the book, I will share all my notes on each chapter, so stay subscribed for that soon! 🔥

That said, on the topic of rate limiters, and to generalize: they play a crucial role in maintaining system stability, preventing abuse, and ensuring fair resource allocation.

So, let's check what rate limiters are, why they're essential, and how to design them effectively. 👇

What is a Rate Limiter?

A rate limiter is a tool that controls the rate of traffic a client can send to, or receive from, a service (for example, an API).

It's used to prevent DoS (Denial of Service) attacks, manage API usage, and ensure system stability by limiting the number of requests a client is allowed to send over a specified period.

Why Use Rate Limiters?

Prevent DoS Attacks

This comes as a by-product of the limit we enforce on the number of requests a client can make in a given timeframe. Malicious users won’t be able to make thousands or millions of requests per second, because we cap how many of them get through 🤷‍♂️

Resource Management

Imagine a scenario where your application has big spikes of traffic. For example, in e-commerce, Black Friday is happening, or all of a sudden your website goes viral. By using rate limiting techniques, we can handle such spikes and manage our server resources efficiently.

Cost Control

Take OpenAI as an example. We all know of the limits on requests or tokens we can use per minute/hour/request. All of these limits are enforced and adjusted based on the Pricing Tier of your plan. This is done through rate limiters.

Improved User Experience

With rate limiters we achieve better response times and availability for our system, because we prevent server overload.

… there are many more benefits, but these ☝️ are some of the most important ones!

Rate Limiting Algorithms

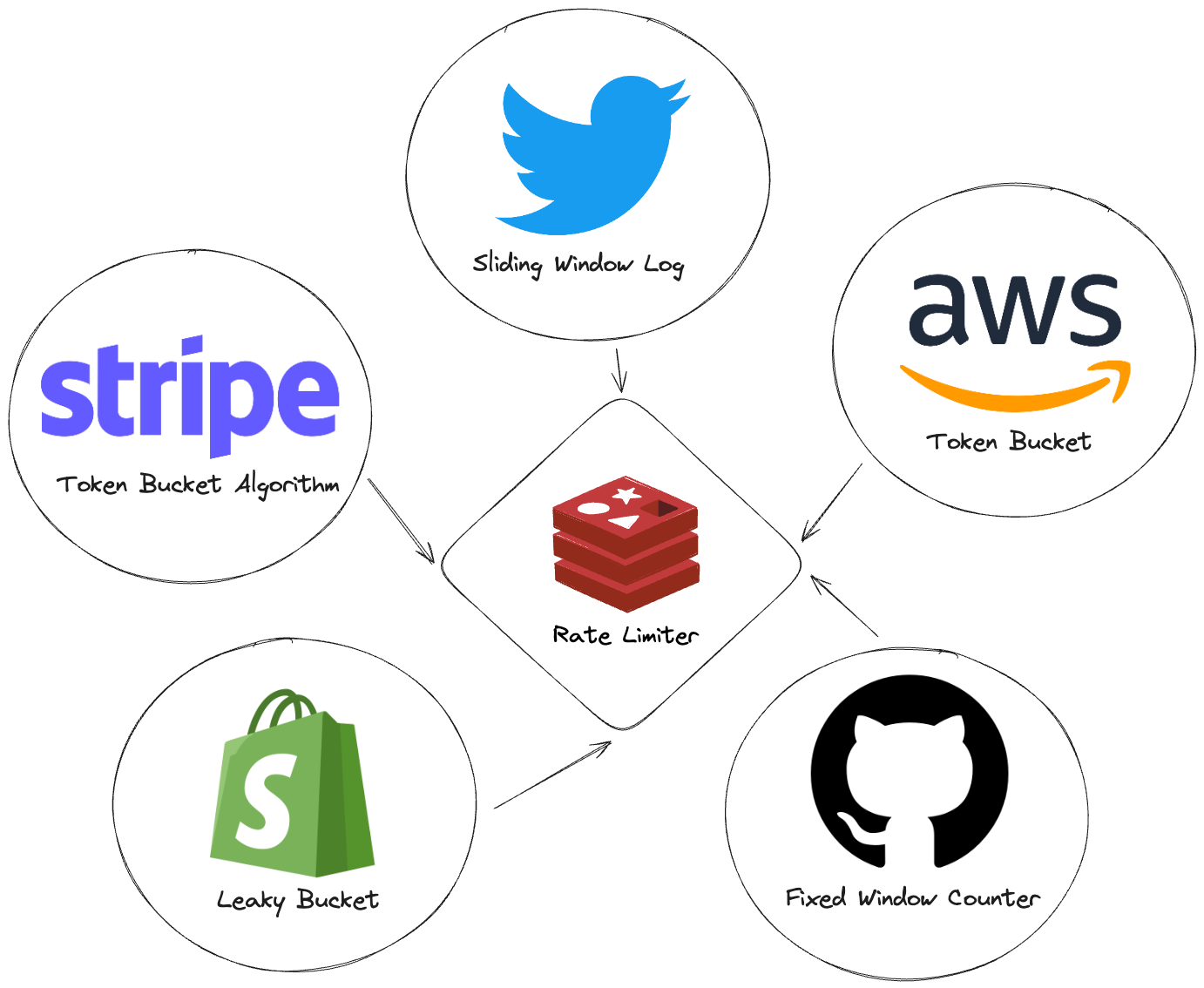

To implement a rate limiter in our system, we first need to decide what algorithm to use. To do so, we need to think of the different use-cases and limits we wanna enforce. An example is showcased here:

That said, let's explore some of the most common algorithms and why we should pick one over the other.

1. Token Bucket 🪣

How it works: Imagine a bucket that holds a fixed number of tokens and gets refilled at a steady rate. Each request consumes a token. If there are no tokens left, the request is denied.

Example: 5 requests per minute. At 1:00, the bucket has 5 tokens. Two requests at 1:29 consume 2 tokens, leaving 3. At 2:00, the bucket refills to 5 tokens.
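To make the Token Bucket idea more concrete, here is a minimal single-threaded sketch in Python. The class name, the `clock` parameter and the choice to top the bucket up continuously (in proportion to elapsed time, instead of all at once on the minute mark as in the example above) are my own assumptions for illustration, not something taken from the book or from any company's implementation.

```python
import time


class TokenBucket:
    """Hypothetical token bucket: up to `capacity` tokens, refilled over time."""

    def __init__(self, capacity, refill_per_second, clock=time.monotonic):
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self.clock = clock              # injectable so the sketch is easy to test
        self.tokens = float(capacity)   # start with a full bucket
        self.last_refill = clock()

    def allow(self):
        """Return True and consume one token if a token is available."""
        now = self.clock()
        elapsed = now - self.last_refill
        # Add tokens for the elapsed time, never exceeding the bucket capacity.
        self.tokens = min(self.capacity,
                          self.tokens + elapsed * self.refill_per_second)
        self.last_refill = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False
```

Because a full bucket can be spent all at once, a burst of up to `capacity` requests is allowed, which is the spike-friendly behavior this algorithm is known for.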

The Token Bucket is used by companies like Stripe and Amazon, because of its ability to handle traffic spikes:

Stripe uses the Token Bucket algorithm to improve the availability and reliability of their API.

📌 More on their use case in this Stripe Blog-Post talking about exactly that!

AWS uses the Token Bucket algorithm for throttling EC2 API requests for each account on a per-region basis, which improves service performance and ensures fair usage for all Amazon EC2 customers.

📌 More on that in their blog-post here!

2. Leaky Bucket 💧

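Similar to the Token Bucket, but it uses a queue instead: incoming requests are added to a fixed-size queue and processed at a constant rate, and when the queue is full, new requests are dropped. It ensures a constant output rate, which is perfect for products like Shopify, which need to keep stable performance during Black Friday events!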

📌 You can check this blog-post, talking more about how Shopify uses this algo for their rate limiting!
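A rough sketch of the Leaky Bucket could look like the one below. It is only an illustration under my own assumptions: the names (`LeakyBucket`, `offer`, `leak_per_second`) are made up, and "processing" a request is reduced to removing it from the queue, where a real system would hand it over to the server.

```python
import time
from collections import deque


class LeakyBucket:
    """Hypothetical leaky bucket: bounded queue drained at a constant rate."""

    def __init__(self, capacity, leak_per_second, clock=time.monotonic):
        self.capacity = capacity
        self.leak_per_second = leak_per_second
        self.clock = clock
        self.queue = deque()
        self.last_leak = clock()

    def _leak(self):
        """Drain as many queued requests as the elapsed time allows."""
        now = self.clock()
        leaked = int((now - self.last_leak) * self.leak_per_second)
        if leaked > 0:
            for _ in range(min(leaked, len(self.queue))):
                self.queue.popleft()  # a real system would process it here
            # Advance only by the time that the whole leaks account for.
            self.last_leak += leaked / self.leak_per_second

    def offer(self, request):
        """Queue the request if there is room; return False if it is dropped."""
        self._leak()
        if len(self.queue) < self.capacity:
            self.queue.append(request)
            return True
        return False
```

The key difference from the Token Bucket sketch is that bursts are not passed through: they wait in the queue (or get dropped), so the output rate stays constant.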

3. Fixed Window Counter 🗿

Divides time into fixed windows and allows a set number of requests per window. This makes it simple to implement and understand, making it easier for GitHub to communicate rate limits to its large developer community.

📌 You can easily implement it with Java and Redis for example. Check this impl!

Example: 5 requests per hour. Windows are 1AM, 2AM, 3AM, etc.

Potential issue: A user could make 5 requests at 1:59 AM and another 5 at 2:01 AM, effectively doubling the limit.
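Here is a small in-memory sketch of the Fixed Window Counter, mostly to make the boundary issue visible. The class and parameter names are hypothetical, and a plain dictionary stands in for a store such as Redis; old windows are never evicted here, which a real implementation would have to handle.

```python
import time
from collections import defaultdict


class FixedWindowCounter:
    """Hypothetical fixed window limiter: `limit` requests per window."""

    def __init__(self, limit, window_seconds, clock=time.time):
        self.limit = limit
        self.window_seconds = window_seconds
        self.clock = clock
        # (client_id, window index) -> number of requests seen in that window
        self.counts = defaultdict(int)

    def allow(self, client_id):
        # All timestamps inside the same window map to the same window index.
        window = int(self.clock() // self.window_seconds)
        key = (client_id, window)
        if self.counts[key] >= self.limit:
            return False
        self.counts[key] += 1
        return True
```

With `limit=5` and `window_seconds=3600`, five requests at minute 59 of one window and five more at minute 1 of the next are all accepted, since each burst lands in a different counter. That is exactly the doubling described above.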

4. Sliding Window Log 🪵

This one addresses the Fixed Window Counter issue by tracking request timestamps. It provides more accurate rate limiting over time, which is essential for Twitter's real-time nature and high-volume API usage!

📌 More on their use of this algo here!
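A minimal sketch of the Sliding Window Log is shown below. The names are my own, and this variant only logs accepted requests (some descriptions of the algorithm also log rejected ones), so treat it as one possible reading rather than the reference implementation.

```python
import time
from collections import defaultdict, deque


class SlidingWindowLog:
    """Hypothetical sliding window log: keeps timestamps of accepted requests."""

    def __init__(self, limit, window_seconds, clock=time.monotonic):
        self.limit = limit
        self.window_seconds = window_seconds
        self.clock = clock
        self.logs = defaultdict(deque)  # client_id -> timestamps, oldest first

    def allow(self, client_id):
        now = self.clock()
        log = self.logs[client_id]
        # Drop timestamps that have fallen out of the trailing window.
        while log and log[0] <= now - self.window_seconds:
            log.popleft()
        if len(log) < self.limit:
            log.append(now)
            return True
        return False
```

Because the window always trails the current request, the "5 at 1:59 and 5 at 2:01" trick no longer works. The price is memory: one timestamp is stored per accepted request.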

5. Sliding Window Counter 🪟

Combines fixed and sliding window approaches for more even distribution of requests.

📌 For more information & Redis implementation, check this out!

I didn’t find usages of it in real-world apps, though I am sure they exist. 😅
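One common way to combine the two approaches is to keep a counter for the current and the previous fixed window, and weight the previous one by how much of it still overlaps the sliding window. The sketch below follows that idea for a single client; the names and the exact acceptance rule (`estimated + 1 <= limit`) are my assumptions.

```python
import time


class SlidingWindowCounter:
    """Hypothetical sliding window counter for one client."""

    def __init__(self, limit, window_seconds, clock=time.time):
        self.limit = limit
        self.window_seconds = window_seconds
        self.clock = clock
        self.current_window = int(clock() // window_seconds)
        self.current_count = 0
        self.previous_count = 0

    def allow(self):
        now = self.clock()
        window = int(now // self.window_seconds)
        if window != self.current_window:
            # Roll over; if more than one window passed, the previous is empty.
            adjacent = window == self.current_window + 1
            self.previous_count = self.current_count if adjacent else 0
            self.current_count = 0
            self.current_window = window
        # Fraction of the current fixed window that has already elapsed.
        elapsed = (now % self.window_seconds) / self.window_seconds
        # Weighted estimate of requests in the trailing sliding window.
        estimated = self.current_count + self.previous_count * (1 - elapsed)
        if estimated + 1 <= self.limit:
            self.current_count += 1
            return True
        return False
```

For example, with a limit of 10 per minute, 10 requests in the previous minute, and 25% of the current minute elapsed, the estimate starts at 10 × 0.75 = 7.5, so only two more requests fit. It is an approximation (it assumes the previous window's requests were evenly spread), but it needs just two counters per client.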

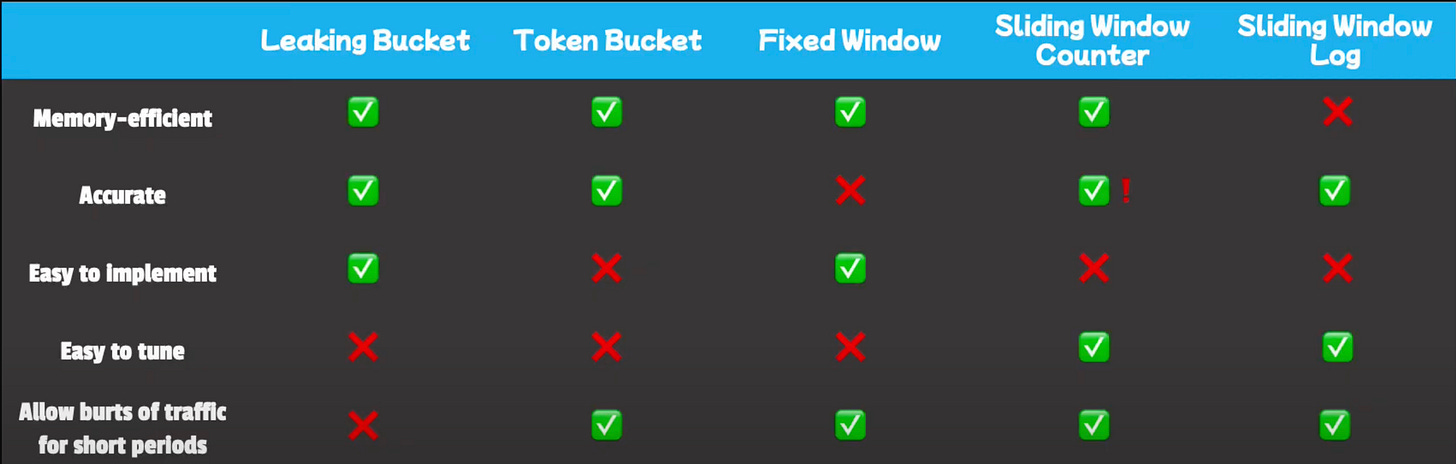

Overview

This table gives a good breakdown of the pros and cons of each algorithm! You decide which algorithm fits best for your needs.

Single- vs. Multi-Threaded Rate Limiter

When designing rate limiters, it's crucial to consider the differences between single-threaded and multi-threaded environments.

⚠️ Generally, if you expect your app to face high concurrency, and the accuracy of the counters is something you can sacrifice to a certain extent, opt for the multi-threaded approach. Otherwise, you may wanna pick the single-threaded rate limiter, as it offers simplicity and makes it easy to maintain a consistent state.

Single-Threaded Rate Limiter

simple and easy to understand.

may become a bottleneck in high-concurrency environments

it’s easy to maintain a consistent state of the rate limiter (compared to the multi-threaded approach)

great accuracy of the counters/timestamps

Multi-Threaded Rate Limiter

it can handle multiple requests simultaneously - a must in modern systems

it requires careful synchronization to maintain accuracy

potential of race conditions - may result in incorrect counting

rate limiting across different nodes (i.e. in a distributed env.) requires us to keep the counters in a centralized store like Redis, to maintain consistency

this approach may sacrifice accuracy for better performance

☝️ These are just some considerations to take into account when designing or implementing a rate limiter in your next interview, project or job.
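To illustrate the synchronization point from the multi-threaded list, here is a small sketch that guards the check-and-increment step with a lock. The class name is hypothetical and the limiter is deliberately simplistic (a single counter with no time window), so that the only thing on display is the race condition being closed.

```python
import threading


class LockedLimiter:
    """Hypothetical thread-safe counter: allows at most `limit` requests."""

    def __init__(self, limit):
        self.limit = limit
        self.count = 0
        self._lock = threading.Lock()

    def allow(self):
        # Without the lock, two threads could both read count == limit - 1
        # and both increment it, letting one request too many through.
        with self._lock:
            if self.count < self.limit:
                self.count += 1
                return True
            return False
```

The lock keeps the counter accurate, but every request now waits on it, which is the accuracy vs. performance trade-off mentioned above.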

System Design for Rate Limiters

When designing a rate limiter, consider the following aspects:

Location in the System

Client-Side: Not ideal as it can be manipulated by users.

Server-Side: More secure and typical. Can be implemented:

Directly in the application

As middleware (e.g., part of an API Gateway)

High-Level Design

Client sends a request

Rate limiter checks the counter in Redis

If within limit, increment counter and pass to server

If over limit, return 429 (Too Many Requests) error
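The four steps above can be sketched roughly like this. Everything here is an assumption made for illustration: `handle_request`, `counters` and `forward` are made-up names, a plain dictionary stands in for Redis, and the counter never resets (in Redis you would typically let the key expire at the end of the window).

```python
from http import HTTPStatus


def handle_request(client_id, counters, limit, forward):
    """Check the client's counter, then forward the request or reject it.

    counters: dict standing in for the Redis counter store (assumption)
    forward:  callable that passes the request on to the actual server
    """
    count = counters.get(client_id, 0)
    if count >= limit:
        # Over the limit: reject with 429 without touching the server.
        return HTTPStatus.TOO_MANY_REQUESTS, "rate limit exceeded"
    counters[client_id] = count + 1
    return HTTPStatus.OK, forward(client_id)
```

Note that the read and the write are two separate steps here, so with concurrent requests this sketch has exactly the race condition discussed in the considerations that follow.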

👉 Deep-Dive Design Considerations

Here, think of:

Rule Configuration: Use YAML or JSON files to store rules. Update periodically via a background job.

User Feedback: Use HTTP headers to inform users about their limit status:

X-Ratelimit-Remaining

X-Ratelimit-Limit

X-Ratelimit-Retry-After

Race Conditions: Make the read-check-update step atomic, for example with a Lua script or with Redis sorted sets, so that concurrent requests are counted accurately.

Synchronization: In distributed systems, use a managed Redis instance for synchronized data across nodes.

Monitoring & Logging: Implement proper logging and monitoring to validate that rate limiting is working as expected, and to detect and respond to unusual traffic patterns.
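As a small illustration of the Rule Configuration and User Feedback points, the sketch below loads rules from a JSON string and builds the response headers. The rule schema (`endpoint`, `limit`, `window_seconds`), the function names and the exact header spellings are assumptions on my side; adjust them to whatever your API actually documents.

```python
import json

# Hypothetical rule file content; the schema is made up for this example.
RULES_JSON = """
[
  {"endpoint": "/login", "limit": 5, "window_seconds": 60},
  {"endpoint": "/search", "limit": 100, "window_seconds": 60}
]
"""


def load_rules(text):
    """Parse the rules and index them by endpoint."""
    return {rule["endpoint"]: rule for rule in json.loads(text)}


def rate_limit_headers(limit, used, retry_after_seconds):
    """Build headers telling the client where it stands."""
    remaining = max(0, limit - used)
    headers = {
        "X-Ratelimit-Limit": str(limit),
        "X-Ratelimit-Remaining": str(remaining),
    }
    if remaining == 0:
        # Only tell the client when to retry once it is actually throttled.
        headers["X-Ratelimit-Retry-After"] = str(retry_after_seconds)
    return headers
```

A background job could call `load_rules` periodically on the file contents and swap the resulting dictionary in, so rule changes don't require a redeploy.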

Real-World Use Cases

You can see more on that in the ‘Rate Limiting Algorithms’ section, where I give an example and link to the actual company blog-post regarding each usage of rate limiting. That said, as a summary:

API Management: Companies like Twitter use rate limiting to manage their API usage. They limit users to 300 requests per 3-hour window for certain endpoints.

E-commerce Platforms: During flash sales, platforms like Amazon implement strict rate limiting to ensure fair access and prevent system overload.

Social Media: Instagram limits the number of likes, follows, and comments a user can make per hour to prevent spam and bot activity.

Financial Services: Trading platforms use rate limiters to control the frequency of trades and potentially prevent market manipulation.

Conclusion

Rate limiters are an essential tool in the engineer’s toolbox!

There are some core algorithms that can be used to implement a rate limiter that best serves your needs.

Be careful with multi-threaded implementations of rate limiters - there are a lot of problems we need to deal with there.

Many companies, if not most, use some sort of rate limiting.

Once again, the inspiration for this blog-post came from a chapter in the book ‘System Design Interview’. 🙌

I hope this was an interesting read for all of you & I am very much looking forward to my next blog-post, which will most probably be on the topic of ‘Consistent Hashing’!

Have a productive week & keep on studying! 🚀