Guaranteeing Message Delivery In Distributed Systems: The Transactional Outbox/Inbox Pattern

Learn how to achieve guaranteed delivery and exactly-once processing of events in a micro-services architecture. Asked in interviews 🤝

in today’s software engineering landscape, everyone has heard of micro-services architecture

and if you’ve heard that and decided to check what it is, you most probably came across terms like ‘event-driven architecture’, ‘events’ and so on..

well, that’s because they are a crucial part of scaling an application.. No more ‘just call the API of the service’ type of communication

or at least, it’s reduced 😅, because there are trade-offs to everything and sometimes you need the response straightaway, which is not what you can achieve with events/messages

they are useful when you can live with eventually consistent data, i.e. when a small delay before other services see the change is acceptable

as soon as you move from direct API calls to event-driven, a whole new set of reliability challenges appears.

What if your service crashes after committing a database change, but before sending the event?

What if your message broker delivers the same event twice—or worse, loses it altogether?

How do you make sure that other services process every event, but only once?

that’s where the Outbox-Inbox Pattern comes in

let’s break down what the pattern is, why it matters, and how you can implement it in a modern Java stack using AWS SNS and SQS - with clear code examples

so, what is it?

the Outbox-Inbox Pattern is a reliable messaging design pattern used in distributed systems to ensure that messages/events between services are delivered at least once and without loss, even when failures (like crashes or network issues) occur.

we’ve used this in one of the companies I worked at & I just saw that Wise use it quite extensively - even making their impl into a public GH repo!

why do we need it?

Micro-services often need to publish events (e.g., "UserCreated", "PaymentProcessed") after completing a local transaction..

.. and sending messages directly to a message broker/service (e.g., Kafka, SQS/SNS) inside a transaction can lead to inconsistencies:

The message is sent but the transaction then rolls back (phantom event: consumers react to a change that never happened).

The transaction commits but the message fails to send (event lost).
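to make the second failure concrete, here is a tiny plain-JDK sketch of the naive "dual write". `Broker`, `NaiveOrderService` and the in-memory list standing in for the database are all made up for illustration, they are not part of any real SDK:

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical stand-in for a broker client (SNS, Kafka, ...).
interface Broker {
    void send(String event);
}

// Naive dual write: the DB commit and the broker call are two separate steps.
class NaiveOrderService {
    final List<String> committedOrders = new ArrayList<>(); // stands in for the DB
    private final Broker broker;

    NaiveOrderService(Broker broker) {
        this.broker = broker;
    }

    void placeOrder(String orderId) {
        committedOrders.add(orderId);          // step 1: the local change is committed
        broker.send("OrderPlaced:" + orderId); // step 2: can fail, and step 1 is NOT undone
    }
}
```

if `send` throws (or the process dies between the two steps), the order exists but no other service will ever hear about it.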

then, what are the key benefits of it?

atomicity: the message and the data change are written in the same transaction, so they commit or roll back together

reliability: survives crashes - events are persisted until sent/processed.

idempotency: receivers process events exactly once, even with duplicates.

but, how does it work?

let’s go through a brief run-down of how this pattern does its job of preserving messages and making sure they are delivered (with the option of processing them exactly once)

it consists of two parts:

when the service publishes the event

when a service consumes the event

Outbox Pattern (Sender/Publisher side)

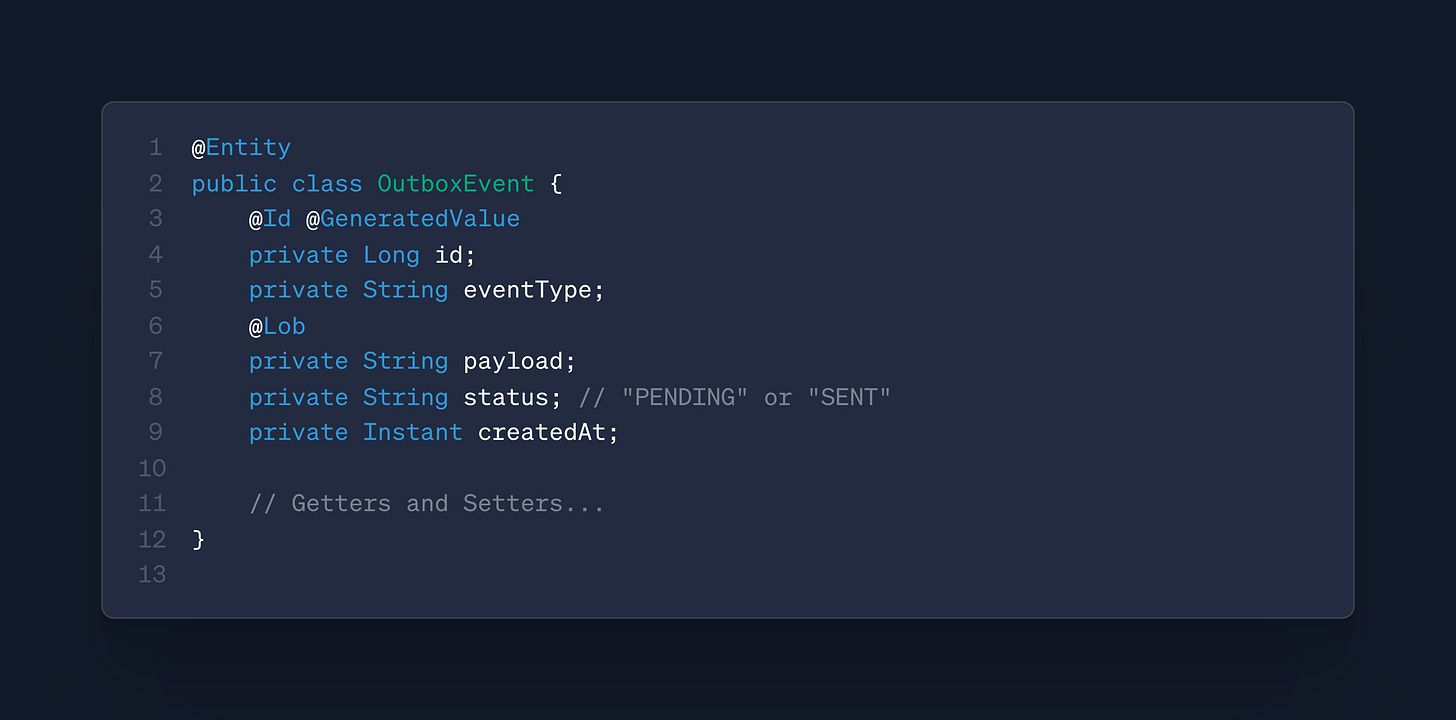

within the same DB transaction as your business operation (e.g., order placed), you write the event to an outbox table in your database.

a separate background process (or service) reads new records from the outbox table and publishes them to the message broker.

once published successfully, the record is marked as processed or deleted.

the guarantee: you never lose messages - even if your service crashes, because the outbox table acts as a buffer.

Inbox Pattern (Receiver/Consumer side)

when a service receives an event from the broker, it stores the event in its inbox table (idempotency table) before processing it

before handling an event, the service checks if it has already processed it (using event IDs in the inbox table)

if already processed, it ignores the event.. effectively preventing duplicates

the guarantee: even if the same event is delivered multiple times (due to "at-least-once" delivery), you process it only once

great, you have the theory now, but how will it look in code, in a real-world system?

let me show you 🤝

🚨 these are real-world style Java/Spring examples for the outbox, publisher, and inbox handling, using AWS SNS and SQS SDKs (with a little help from Spring annotations and JPA)

practical example with Java & AWS SQS/SNS

1st: the following is a Payment Service that saves the outbox event in the same DB transaction as the payment (look at the @Transactional annotation)
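a minimal sketch of that idea, written against the plain JDK so it runs without Spring or a database on the classpath. `InMemoryDb` and its `inTransaction` helper are hypothetical stand-ins for a real database transaction, and the field names are assumptions; in a Spring service `processPayment` would simply be annotated with `@Transactional` and use JPA repositories:

```java
import java.util.ArrayList;
import java.util.List;
import java.util.UUID;
import java.util.function.Consumer;

// One row of the outbox table (field names are illustrative).
record OutboxEvent(String id, String eventType, String payload, String status) {}

record Payment(String id, long amountCents) {}

// Toy in-memory "database": writes made inside inTransaction() are applied
// only if the whole block succeeds, which mimics commit/rollback.
class InMemoryDb {
    final List<Payment> payments = new ArrayList<>();
    final List<OutboxEvent> outbox = new ArrayList<>();

    class Tx {
        final List<Payment> newPayments = new ArrayList<>();
        final List<OutboxEvent> newEvents = new ArrayList<>();
    }

    synchronized void inTransaction(Consumer<Tx> work) {
        Tx tx = new Tx();
        work.accept(tx);                 // an exception here means nothing is applied
        payments.addAll(tx.newPayments); // "commit": both tables change together
        outbox.addAll(tx.newEvents);
    }
}

class PaymentService {
    private final InMemoryDb db;

    PaymentService(InMemoryDb db) {
        this.db = db;
    }

    // In a Spring service this method would carry @Transactional instead.
    String processPayment(long amountCents) {
        if (amountCents <= 0) {
            throw new IllegalArgumentException("amount must be positive");
        }
        String paymentId = UUID.randomUUID().toString();
        db.inTransaction(tx -> {
            tx.newPayments.add(new Payment(paymentId, amountCents));
            String payload = "{\"paymentId\":\"" + paymentId
                    + "\",\"amountCents\":" + amountCents + "}";
            // the event is stored next to the business data, not sent to the broker
            tx.newEvents.add(new OutboxEvent(UUID.randomUUID().toString(),
                    "PaymentProcessed", payload, "PENDING"));
        });
        return paymentId;
    }
}
```

the important bit: nothing talks to the broker here. the service only writes two rows, and either both are stored or neither is.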

2nd: let’s build the publisher that publishes the ‘PENDING’ events from the outbox table to an SNS topic 👇
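first, the shape of an outbox row. this is a plain-Java sketch with assumed field names (`eventType`, `payload`, `sentAt` and so on); with JPA the class would be an `@Entity` mapped to the outbox table, with `id` as its `@Id`:

```java
import java.time.Instant;
import java.util.UUID;

enum OutboxStatus { PENDING, SENT }

// Plain-Java shape of an outbox row (illustrative field names).
final class OutboxEvent {
    private final String id;
    private final String eventType;
    private final String payload;
    private final Instant createdAt;
    private OutboxStatus status;
    private Instant sentAt; // null until the event has been published

    private OutboxEvent(String id, String eventType, String payload, Instant createdAt) {
        this.id = id;
        this.eventType = eventType;
        this.payload = payload;
        this.createdAt = createdAt;
        this.status = OutboxStatus.PENDING;
    }

    // Every new event starts as PENDING with a fresh unique ID.
    static OutboxEvent pending(String eventType, String payload) {
        return new OutboxEvent(UUID.randomUUID().toString(), eventType, payload, Instant.now());
    }

    // Called by the publisher once the broker has accepted the event.
    void markSent(Instant when) {
        if (status == OutboxStatus.SENT) {
            return; // already marked, keep the first timestamp
        }
        status = OutboxStatus.SENT;
        sentAt = when;
    }

    String getId() { return id; }
    String getEventType() { return eventType; }
    String getPayload() { return payload; }
    Instant getCreatedAt() { return createdAt; }
    OutboxStatus getStatus() { return status; }
    Instant getSentAt() { return sentAt; }
}
```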

now that we have the entity, we need the actual publisher. This publisher can be invoked by a separate thread or worker in the application that takes a batch of events and publishes them at pre-defined intervals.

📝 a note about this: you can tune the rate at which this worker thread processes events, based on the load and stress-testing you do in your application. We did have this configurable in our micro-service architecture!
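a rough sketch of such a worker, again JDK-only. `TopicPublisher` is a hypothetical interface standing in for the SNS client, `OutboxRow` is a simplified row, and the list stands in for the outbox table; `batchSize` and the interval are the knobs you would make configurable:

```java
import java.util.List;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;

// Hypothetical stand-in for the SNS client; a real implementation would call SNS here.
interface TopicPublisher {
    void publish(String payload) throws Exception;
}

// Simplified outbox row for this sketch.
class OutboxRow {
    final String id;
    final String payload;
    String status = "PENDING";

    OutboxRow(String id, String payload) {
        this.id = id;
        this.payload = payload;
    }
}

class OutboxPublisher {
    private final List<OutboxRow> outboxTable; // stands in for the outbox table
    private final TopicPublisher topic;
    private final int batchSize;

    OutboxPublisher(List<OutboxRow> outboxTable, TopicPublisher topic, int batchSize) {
        this.outboxTable = outboxTable;
        this.topic = topic;
        this.batchSize = batchSize;
    }

    // Publishes up to batchSize PENDING rows in table order; returns how many were sent.
    synchronized int publishPendingBatch() {
        int sent = 0;
        for (OutboxRow row : outboxTable) {
            if (sent == batchSize) {
                break;
            }
            if (!row.status.equals("PENDING")) {
                continue;
            }
            try {
                topic.publish(row.payload);
                row.status = "SENT"; // only after the broker accepted the event
                sent++;
            } catch (Exception e) {
                break; // the row stays PENDING and is retried on the next run
            }
        }
        return sent;
    }

    // Runs publishPendingBatch() every intervalMillis on a background thread.
    ScheduledExecutorService start(long intervalMillis) {
        ScheduledExecutorService scheduler = Executors.newSingleThreadScheduledExecutor();
        scheduler.scheduleWithFixedDelay(this::publishPendingBatch,
                intervalMillis, intervalMillis, TimeUnit.MILLISECONDS);
        return scheduler;
    }
}
```

one thing to notice: a crash right after `publish` but before the status update means the row is sent again on the next run. that is the "at least once" part, and the reason the consumer side needs de-duplication.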

3rd: and now, how would a service consume those events, i.e. the inbox part of the pattern?

you can use Spring Cloud AWS for SQS message listeners (and for most AWS related tasks tbh)

once again, we need to define an entity for the InboxEvent, which must align with the structure you’d expect from the service that publishes it.. i.e. keep track of which fields are mandatory and which are optional!
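a possible shape for it, as a plain-Java record. the field names and the choice of which ones are mandatory are assumptions for this sketch; with JPA this would be an `@Entity` whose `@Id` is the message ID:

```java
import java.time.Instant;
import java.util.Objects;

// Plain-Java shape of an inbox row (illustrative field names).
record InboxEvent(String messageId, String eventType, String payload, Instant receivedAt) {
    InboxEvent {
        // mandatory fields: fail fast if the publisher did not send them
        Objects.requireNonNull(messageId, "messageId is mandatory");
        Objects.requireNonNull(eventType, "eventType is mandatory");
        Objects.requireNonNull(receivedAt, "receivedAt is mandatory");
        // payload is treated as optional in this sketch
    }
}
```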

and now moving to the actual processor of the events 👇
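a minimal sketch of the processor, JDK-only. `InboxRepository` is a hypothetical in-memory class that borrows the `existsById`/`save` naming of a Spring Data repository, and `PaymentEventProcessor` is a made-up name; with Spring Cloud AWS the `onMessage` method would be the one annotated with `@SqsListener`:

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical in-memory repository standing in for the inbox table.
class InboxRepository {
    private final Map<String, String> rows = new HashMap<>();

    boolean existsById(String messageId) {
        return rows.containsKey(messageId);
    }

    void save(String messageId, String payload) {
        rows.put(messageId, payload);
    }
}

class PaymentEventProcessor {
    private final InboxRepository inbox;
    int handledCount = 0; // exposed only so the sketch is easy to check

    PaymentEventProcessor(InboxRepository inbox) {
        this.inbox = inbox;
    }

    // With Spring Cloud AWS this would be the @SqsListener method; in a real
    // service the save and the business logic would share one DB transaction.
    void onMessage(String messageId, String payload) {
        if (inbox.existsById(messageId)) {
            return; // duplicate delivery: already handled, ignore it
        }
        inbox.save(messageId, payload); // remember the event
        handle(payload);                // then run the business logic
    }

    private void handle(String payload) {
        handledCount++; // placeholder for the real business logic
    }
}
```

the check and the save are two separate steps here, so under concurrent deliveries of the same message it is the primary key on the inbox table that really protects you.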

❗️ storing the events, upon consuming them, makes sure we don’t process an event twice (you might hear this as ‘dedup’-ing the event).. that’s crucial and one of the biggest PROs of this pattern 🤘

wrap-up of key considerations:

Idempotency:

use message IDs as the primary key in the inbox table for de-duplication.
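why the primary key matters: it turns "have I seen this ID?" into a single atomic insert, so two simultaneous deliveries of the same message cannot both win. a small sketch, where `ConcurrentHashMap.putIfAbsent` plays the role of an INSERT that fails on a duplicate key (`AtomicInbox` is a made-up name, and removing the ID on failure is my stand-in for a transaction rollback):

```java
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ConcurrentMap;
import java.util.concurrent.atomic.AtomicInteger;

class AtomicInbox {
    // putIfAbsent plays the role of an INSERT that fails on a duplicate primary key
    private final ConcurrentMap<String, Boolean> processedIds = new ConcurrentHashMap<>();
    final AtomicInteger handled = new AtomicInteger();

    // Returns true only for the one caller that gets to process the message.
    boolean onMessage(String messageId, Runnable businessLogic) {
        boolean firstTime = processedIds.putIfAbsent(messageId, Boolean.TRUE) == null;
        if (!firstTime) {
            return false; // someone else already claimed this message ID
        }
        try {
            businessLogic.run();
        } catch (RuntimeException e) {
            // mimics a rollback: the inbox row disappears so a redelivery can retry
            processedIds.remove(messageId);
            throw e;
        }
        handled.incrementAndGet();
        return true;
    }
}
```

with a real database you get the same effect by inserting the inbox row first, inside the same transaction as the business logic, and treating a duplicate-key error as "already processed".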

Exactly-once:

standard SQS queues deliver at-least-once, but inbox dedupe gives you effectively exactly-once processing in your domain.

Scalability:

outbox/inbox tables can be pruned or archived over time for performance.

you can make the worker that publishes events from the outbox table configurable, so that each team can set their own pace of publishing events (be careful tho, and communicate this with the teams that plan to consume the event!)
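the pruning part can be as simple as a scheduled job that drops old rows that were already sent. a sketch with an in-memory list standing in for the table; `OutboxPruner`, the row fields and the retention period are all assumptions:

```java
import java.time.Duration;
import java.time.Instant;
import java.util.List;

// Simplified outbox row for this sketch.
record OutboxRow(String id, String status, Instant createdAt) {}

class OutboxPruner {
    // Removes SENT rows older than `retention`; PENDING rows are never touched.
    // Against a real table this would be a DELETE filtered on status and creation time.
    static int prune(List<OutboxRow> table, Duration retention, Instant now) {
        Instant cutoff = now.minus(retention);
        int before = table.size();
        table.removeIf(row -> row.status().equals("SENT") && row.createdAt().isBefore(cutoff));
        return before - table.size();
    }
}
```

keeping PENDING rows no matter how old they are is deliberate: an old unsent event is a problem to investigate, not garbage to collect.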

i hope this post gave you a view of how such a pattern would be implemented in a real organization with many micro-services.

i speak from experience and actually got asked about this in an interview recently, but failed to explain it how i wanted to..

hence the idea for this blog post

don’t be like me. understand this pattern if you are aiming for companies that use events to communicate on the backend!

that said, have a wonderful rest of the week! 🚀

Hey Konstantin, great post!

I was wondering about the consumption of messages from the queue:

In the current example you first check if the message exists by id and then return if it does.

Isn't it theoretically possible for the same message to arrive twice at the same time, thus bypassing the 'existsbyid' check twice and therefore - executing the business logic twice?

Is this something you would want to consider in a production app?