Claude Agent Teams: Why AI Coding Is About to Feel Like Managing a Real Engineering Squad

Building multi-agent coding systems that plan, review, and ship like real engineers

most people still use AI coding tools like this:

One long prompt.

One giant conversation.

One “super engineer” doing everything.

Backend, frontend, tests, refactors, docs - all crammed into a single context window.

it works… until it doesn’t, because the approach breaks down as the work grows:

context spills.

decisions get forgotten.

you end up re-explaining things the model already “knew”.

while researching Claude Code deeply over the last few weeks, one thing became very clear to me:

the problem isn’t prompt quality. the problem is organizational structure.

and that’s exactly the problem Claude Agent Teams, a brand-new Claude Code feature, is built to solve 🔥

helpful links to places I sourced my info from:

The Big Mental Shift: From Prompting to Orchestration

we usually ask:

“How do I make the AI smarter?”

Claude Agent Teams nudges you toward a better question:

“How do I structure the work so intelligence compounds instead of collapsing?”

in real software companies, they don’t hire one engineer to:

design the system

write backend and frontend

test everything

coordinate changes

well, in some they do 😅.. but that’s neither sustainable nor okay.

so to tackle that, what 99% of companies still do is build teams with clear roles.

Claude Agent Teams applies that exact idea to AI coding.

Think in Salaries, Not Tokens 💰

here’s the analogy that made everything click (and made it fun 😄) for me.

p.s. I bet that in a few years, tokens for these agent teams will cost more than hiring actual devs 👀

now, imagine a small product squad:

Team lead / Architect – ~€160k
- breaks down work, coordinates, reviews decisions

Backend Engineer – ~€120k
- APIs, DB models, business logic

Frontend Dev – ~€100k
- UI, state management, UX flows

QA – ~€90k
- test suites, regressions, edge cases

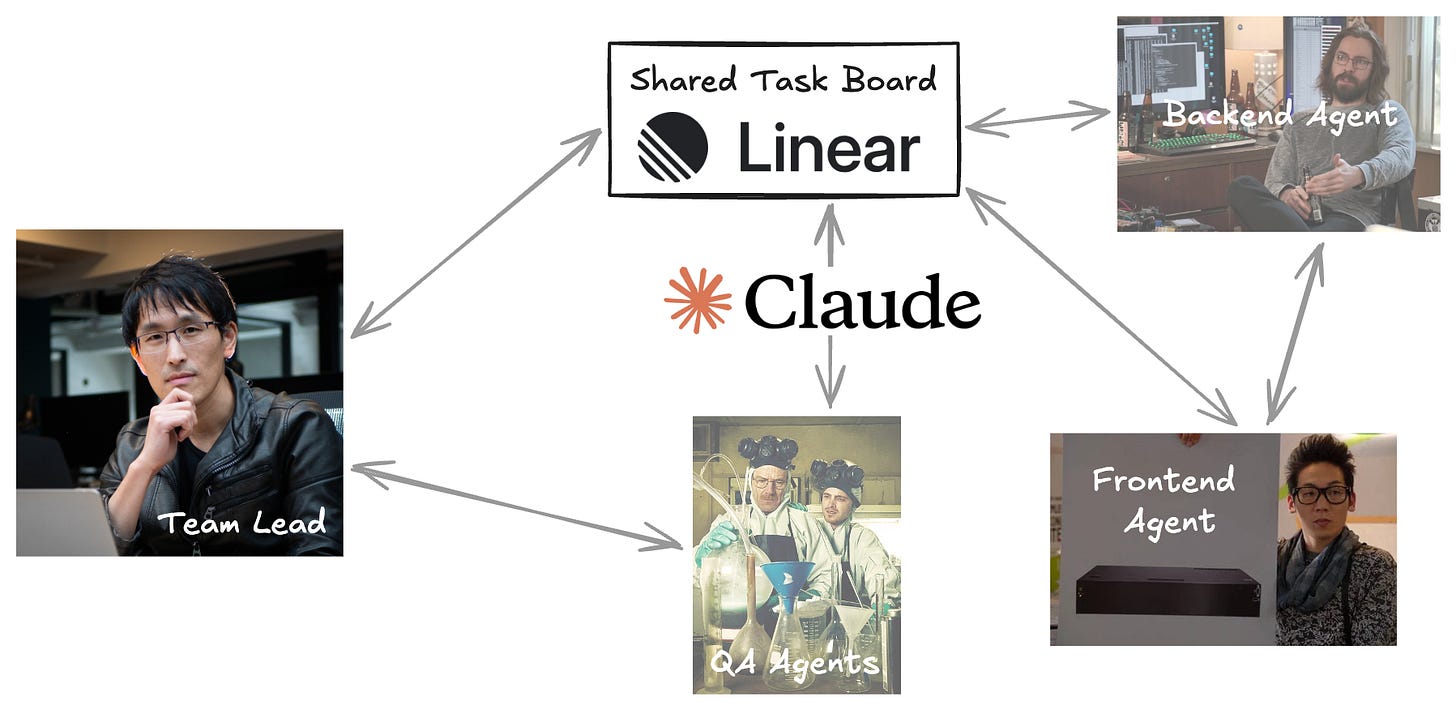

Claude Agent Teams maps shockingly well onto this model:

The lead runs on a stronger (more expensive) model (Sonnet/Opus?)

Teammates run on cheaper, focused models (Haiku?)

work is tracked via a shared task board (like Jira or Linear)

communication happens via inboxes (like in Slack)

instead of paying one “super AI” to do everything sequentially, you build a balanced AI org. 👇

✔️ same budget

✔️ higher throughput

✔️ less context switching
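To make the analogy concrete, here is the squad above written down as a plain Python data structure. The salaries come straight from the analogy; the model tiers (`opus`, `haiku`) are only my guesses from the list above, not a documented Claude Code setting, and `TeamMember`, `squad`, `total_salary` and `members_on` are hypothetical names for illustration.

```python
from dataclasses import dataclass, field

@dataclass
class TeamMember:
    role: str
    model_tier: str   # assumption: guessed tier, not an official Claude Code setting
    salary_eur: int   # taken from the salary analogy
    owns: list[str] = field(default_factory=list)

# Hypothetical roster mirroring the human squad: one strong lead, cheaper specialists.
squad = [
    TeamMember("lead", "opus", 160_000, ["break down work", "coordinate", "review decisions"]),
    TeamMember("backend", "haiku", 120_000, ["APIs", "DB models", "business logic"]),
    TeamMember("frontend", "haiku", 100_000, ["UI", "state management", "UX flows"]),
    TeamMember("qa", "haiku", 90_000, ["test suites", "regressions", "edge cases"]),
]

def total_salary(team: list[TeamMember]) -> int:
    """Yearly cost of the human equivalent of this team."""
    return sum(m.salary_eur for m in team)

def members_on(team: list[TeamMember], tier: str) -> list[str]:
    """Roles that run on a given model tier."""
    return [m.role for m in team if m.model_tier == tier]

print(total_salary(squad))         # 470000
print(members_on(squad, "haiku"))  # ['backend', 'frontend', 'qa']
```

The point of writing it this way: the expensive tier is concentrated in one coordinating role, while the bulk of the work sits on the cheaper tier.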

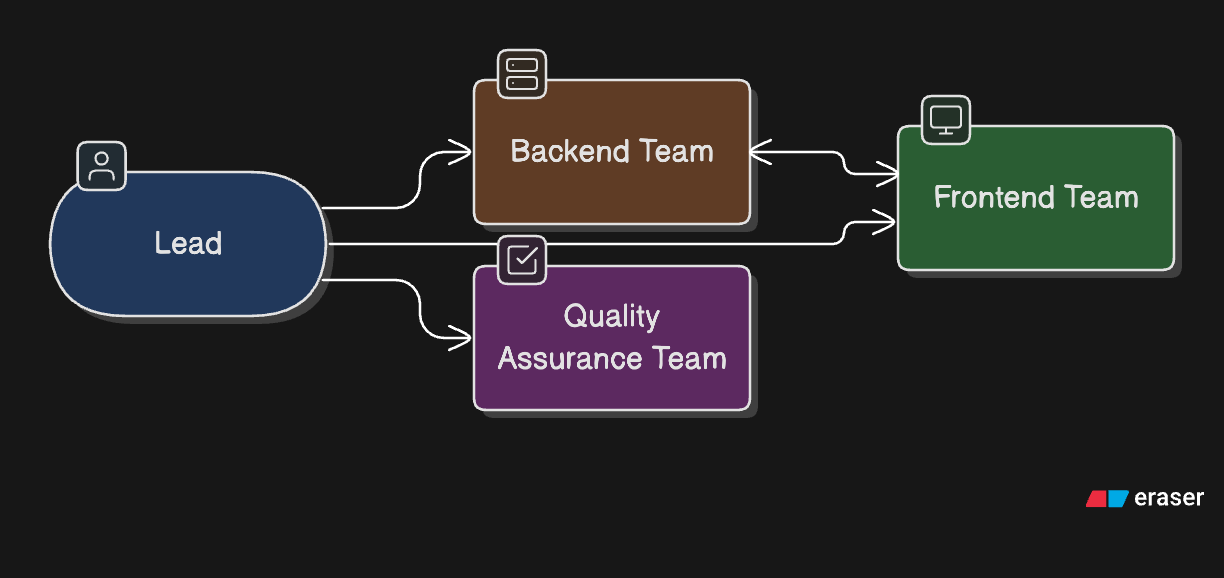

What Claude Agent Teams Actually Are

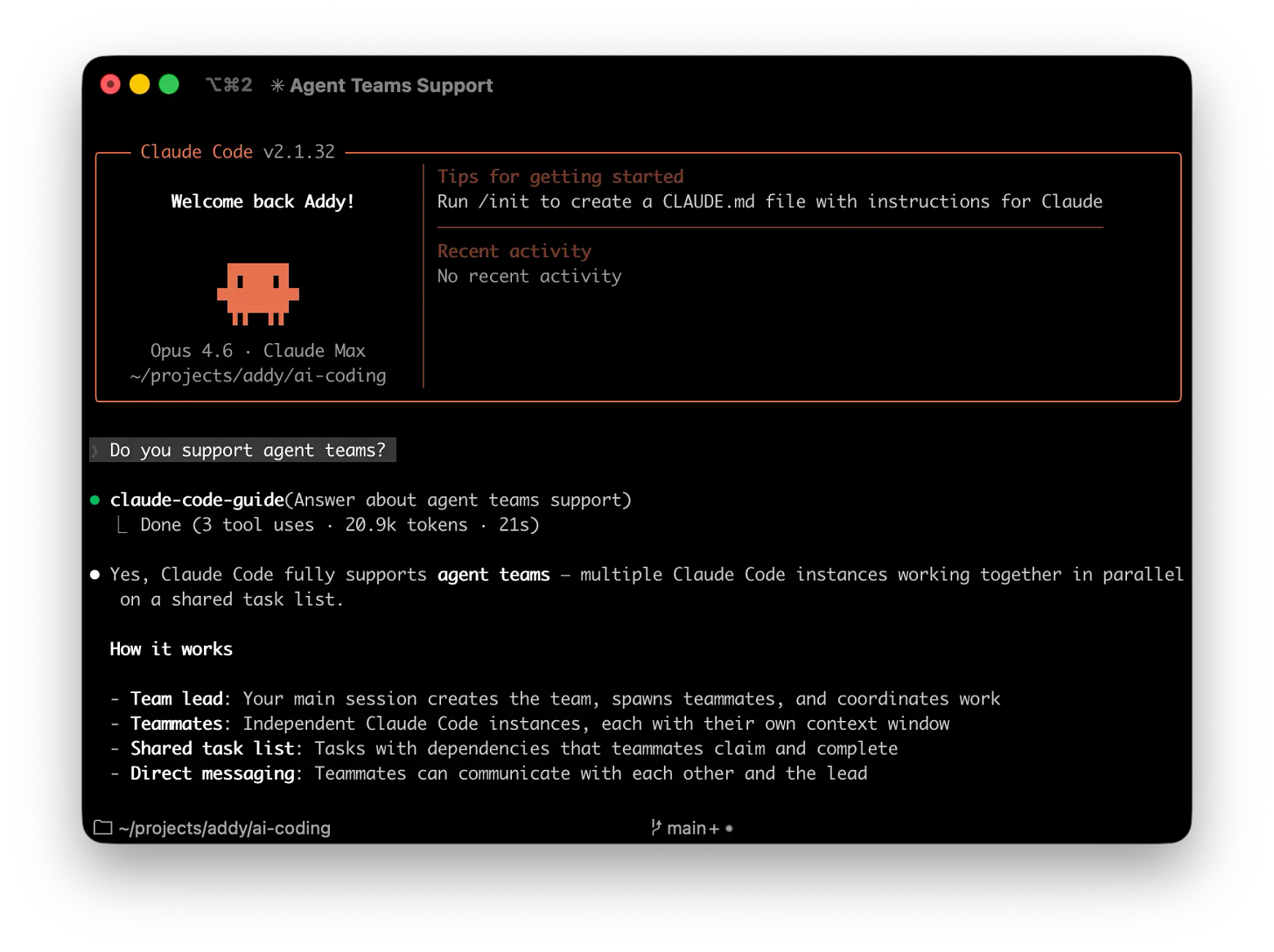

Claude Agent Teams is the newest feature in Claude Code that allows multiple Claude agents to collaborate on the same project.

each team has:

A team lead

Your main Claude Code session. It spawns agents, assigns tasks, and synthesizes results.Teammates

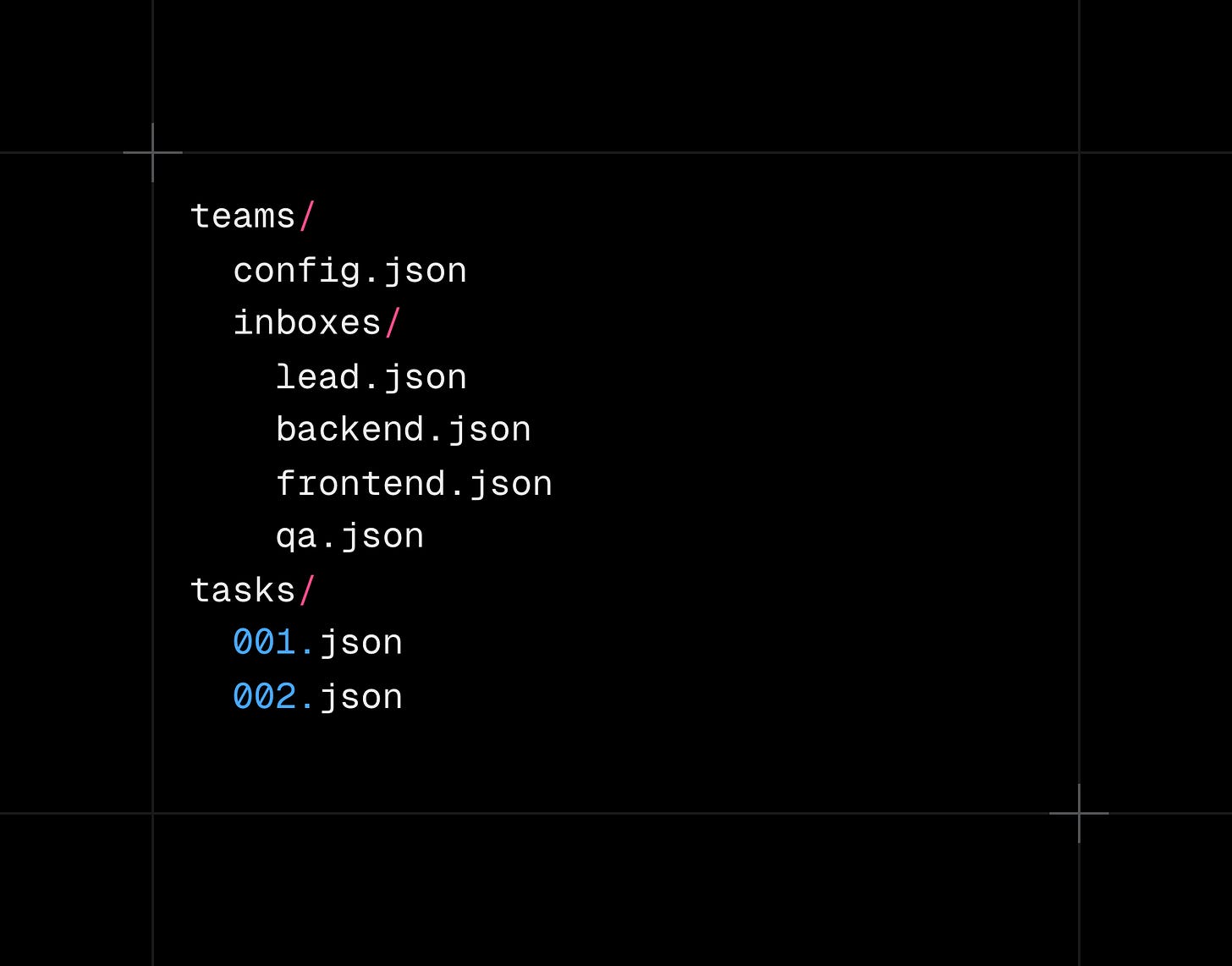

Independent Claude Code sessions with their own context windows and role-specific instructions.A shared task list

A file-backed task board with task states and dependencies.A mailbox system

Agents send each other structured messages by appending JSON to inbox files.
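To show what "task states and dependencies" means in practice, here is a minimal in-memory sketch of such a board. The state names (`pending`, `in_progress`, `completed`), the `blocked_by` field and the `TaskBoard` class are my assumptions for illustration, not Claude Code's actual task format.

```python
from dataclasses import dataclass, field
from typing import Optional

@dataclass
class Task:
    id: str
    title: str
    status: str = "pending"          # assumed states: pending -> in_progress -> completed
    owner: Optional[str] = None
    blocked_by: list[str] = field(default_factory=list)  # ids that must complete first

class TaskBoard:
    """Hypothetical shared board; not Claude Code's real data model."""

    def __init__(self) -> None:
        self.tasks: dict[str, Task] = {}

    def add(self, task: Task) -> None:
        self.tasks[task.id] = task

    def ready(self) -> list[str]:
        """Pending tasks whose dependencies are all completed."""
        return [
            t.id for t in self.tasks.values()
            if t.status == "pending"
            and all(self.tasks[d].status == "completed" for d in t.blocked_by)
        ]

    def claim(self, task_id: str, agent: str) -> bool:
        """An agent takes a ready task; returns False if it is blocked or taken."""
        if task_id not in self.ready():
            return False
        task = self.tasks[task_id]
        task.status, task.owner = "in_progress", agent
        return True

    def complete(self, task_id: str) -> None:
        self.tasks[task_id].status = "completed"

board = TaskBoard()
board.add(Task("api", "Build REST endpoints"))
board.add(Task("ui", "Build login screen", blocked_by=["api"]))
print(board.ready())                  # ['api']
print(board.claim("ui", "frontend"))  # False: still blocked by "api"
board.claim("api", "backend")
board.complete("api")
print(board.ready())                  # ['ui']
```

Dependencies are what keep teammates from stepping on each other: the frontend can't grab the UI task until the API it depends on is done.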

you’re not “chatting harder”. you’re designing a system.

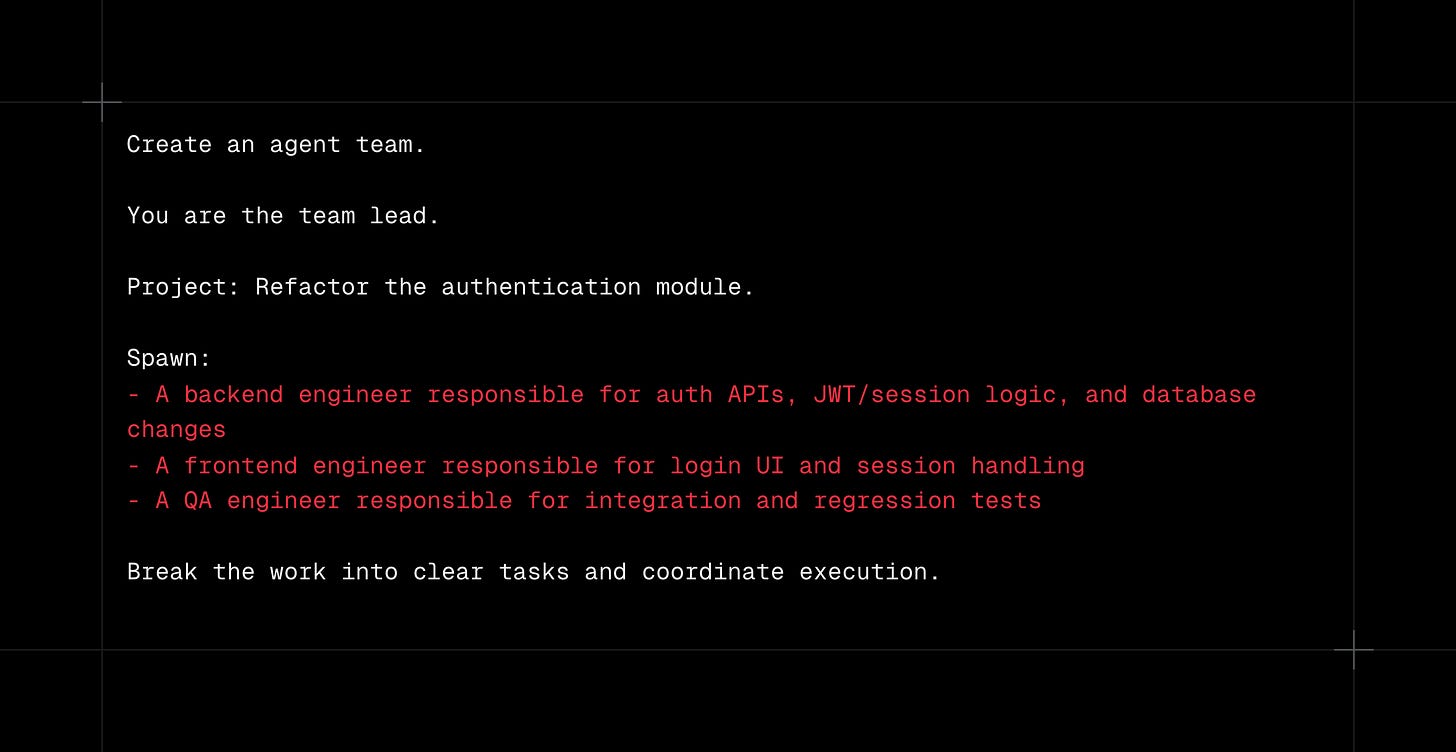

Copy-Paste: Spawning Your First Team

here’s a minimal but powerful Claude Code prompt you can literally paste:

Claude will:

Spawn independent agents

Create a shared task list

Start coordination between roles

at this point, your job shifts from prompting to orchestrating.

Under the Hood: Why This Feels Like a Real Team

Claude Agent Teams are file-backed:

sending a message = appending JSON to an inbox

claiming a task = updating a task file

the lead’s inbox becomes a full audit log

this is AI collaboration designed like software, not chat 🚀
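Here is a small sketch of that file-backed idea, assuming one JSON Lines inbox file per agent and one JSON file per task. The directory layout, file names and message fields (`from`, `to`, `text`) are hypothetical; I haven't verified Claude Code's real on-disk format, so treat this as a model of the idea, not its implementation.

```python
import json
import tempfile
from pathlib import Path

# Hypothetical layout: <root>/inboxes/<agent>.jsonl and <root>/tasks/<id>.json
root = Path(tempfile.mkdtemp())
(root / "inboxes").mkdir()
(root / "tasks").mkdir()

def send(sender: str, recipient: str, text: str) -> None:
    """Sending a message = appending one JSON line to the recipient's inbox."""
    msg = {"from": sender, "to": recipient, "text": text}
    with open(root / "inboxes" / f"{recipient}.jsonl", "a", encoding="utf-8") as f:
        f.write(json.dumps(msg) + "\n")

def read_inbox(agent: str) -> list[dict]:
    """Every message ever sent to `agent`, in order: an append-only audit log."""
    path = root / "inboxes" / f"{agent}.jsonl"
    if not path.exists():
        return []
    return [json.loads(line) for line in path.read_text(encoding="utf-8").splitlines()]

def claim_task(task_id: str, agent: str) -> bool:
    """Claiming a task = rewriting its task file with a new status and owner."""
    path = root / "tasks" / f"{task_id}.json"
    task = json.loads(path.read_text(encoding="utf-8"))
    if task["status"] != "pending":
        return False
    task.update(status="in_progress", owner=agent)
    path.write_text(json.dumps(task), encoding="utf-8")
    return True

(root / "tasks" / "api.json").write_text(
    json.dumps({"id": "api", "status": "pending", "owner": None}), encoding="utf-8"
)
print(claim_task("api", "backend"))   # True
print(claim_task("api", "frontend"))  # False: already claimed
send("backend", "lead", "API task claimed")
send("qa", "lead", "Found an edge case in token refresh")
print(len(read_inbox("lead")))        # 2
```

A real multi-process setup would also need file locking so two agents can't claim the same task at the same moment; this sketch skips that to stay short.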

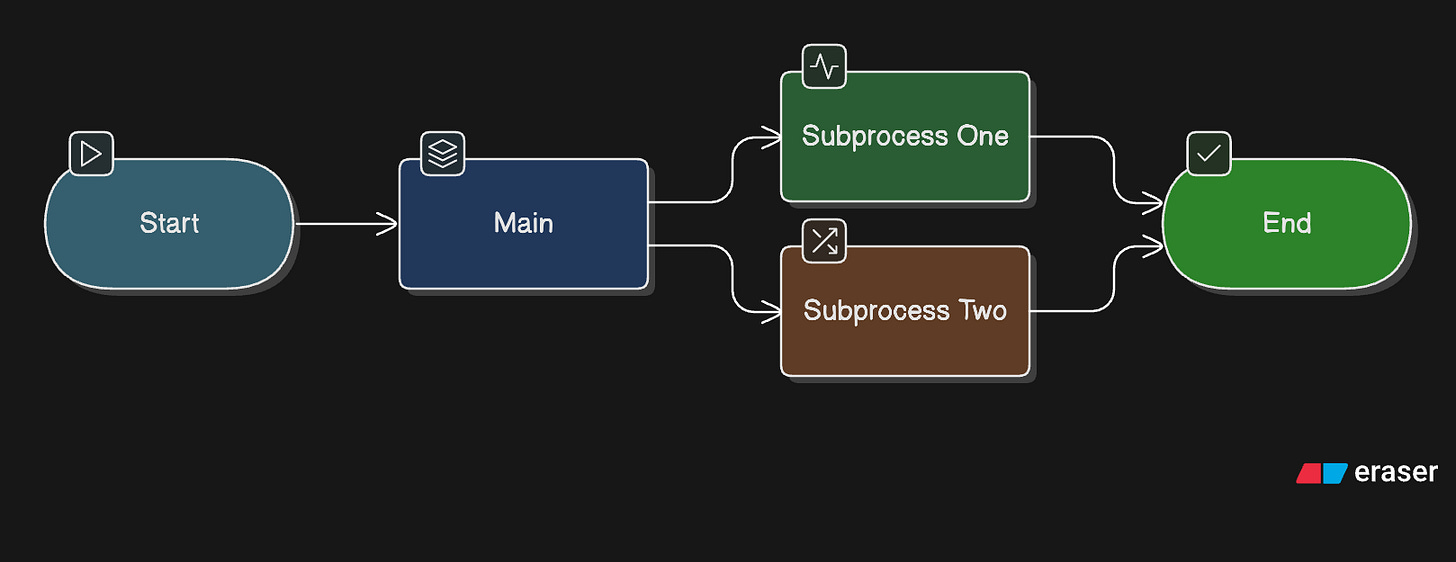

Agent Teams vs Subagents

Claude Code already had subagents, but these are different.

Subagents

Run inside one session

Don’t talk to each other

Great for one-off tasks

Cheap and fast

Agent Teams

Each agent is a full session

Agents message each other directly

Designed for interdependent work

More expensive, but often much faster end-to-end when the work can be parallelized

Subagents are function calls. Agent Teams are organizations.
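One way to picture that last line: a subagent behaves like a function call that hands a single result back to its caller, while teammates are long-lived peers that keep messaging each other. The toy below models both with hypothetical names (`run_subagent`, `Teammate`, `mailboxes`, and the made-up `/sso/callback` endpoint in a message); it says nothing about how Claude Code actually schedules agents.

```python
from collections import defaultdict

# Shared mailboxes: recipient -> list of (sender, text). Hypothetical structure.
mailboxes: dict[str, list[tuple[str, str]]] = defaultdict(list)

def run_subagent(task: str) -> str:
    """Subagent style: called once, returns one result to the caller, then it's gone."""
    return f"summary of {task}"

class Teammate:
    """Teammate style: a peer that can message any other teammate directly."""

    def __init__(self, name: str) -> None:
        self.name = name

    def send(self, to: str, text: str) -> None:
        mailboxes[to].append((self.name, text))

    def inbox(self) -> list[tuple[str, str]]:
        return mailboxes[self.name]

# Function-call style: only the caller sees the result.
result = run_subagent("search the codebase for auth helpers")

# Organization style: peers negotiate directly instead of routing through the lead.
backend, frontend = Teammate("backend"), Teammate("frontend")
backend.send("frontend", "POST /sso/callback returns {token, expires_in}")
frontend.send("backend", "Can you also return the user's display name?")
print(result)
print(frontend.inbox())
print(backend.inbox())
```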

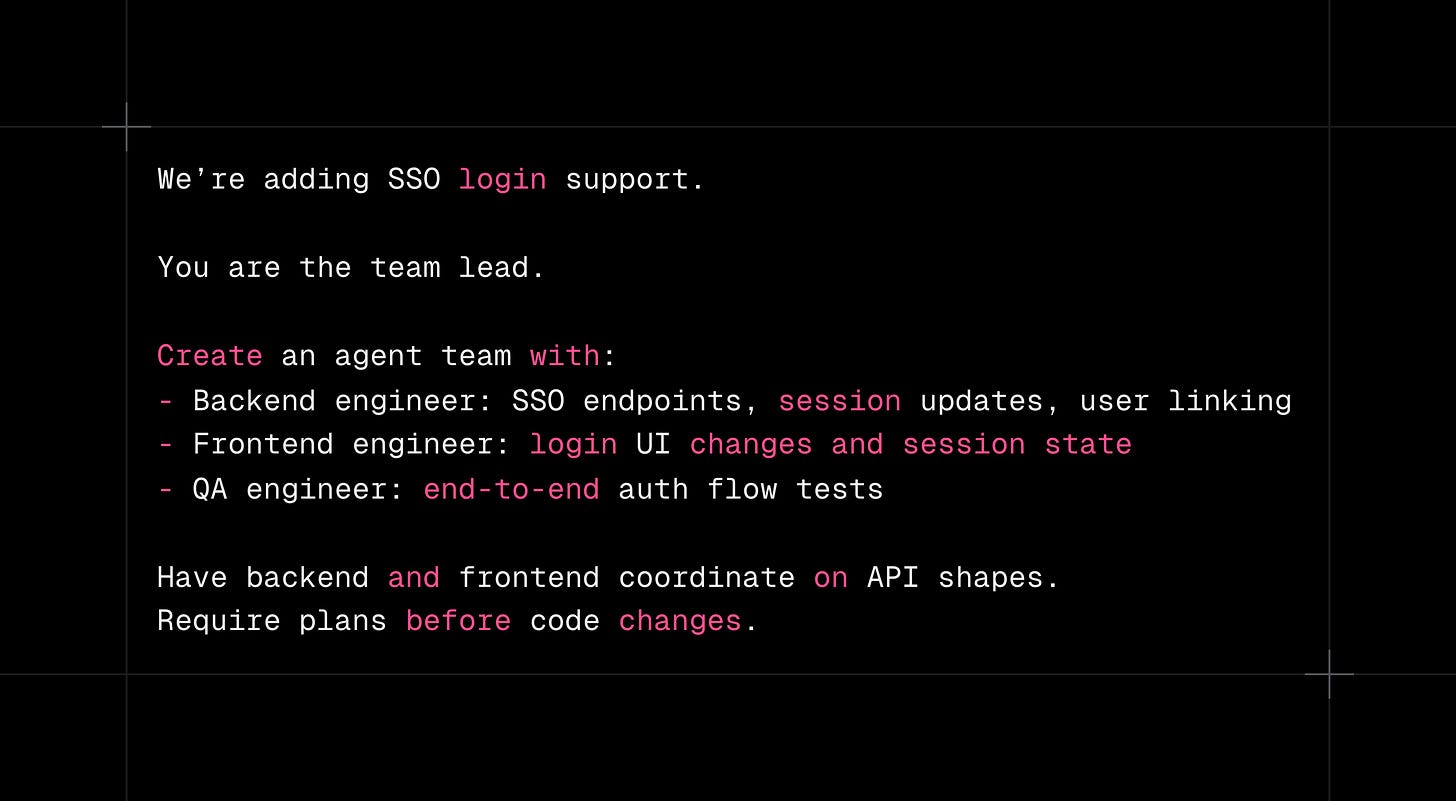

Example: Shipping SSO Authentication

an example Claude Code prompt:

What’s powerful here isn’t speed alone; it’s early alignment.

👉 Backend and frontend negotiate interfaces before code solidifies.

👉 QA raises edge cases before merge.

👉 The lead resolves conflicts at the system level.
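The "negotiate interfaces first" idea can be sketched as a dependency graph. The task names below are my own hypothetical breakdown of an SSO feature, not Claude's actual plan; Python's standard-library `graphlib.TopologicalSorter` then groups them into waves of tasks that can run in parallel.

```python
from graphlib import TopologicalSorter

# Hypothetical SSO breakdown: each task maps to the tasks it depends on.
sso_tasks = {
    "agree_auth_api_contract": set(),
    "backend_oidc_callback": {"agree_auth_api_contract"},
    "frontend_login_flow": {"agree_auth_api_contract"},
    "qa_edge_case_plan": {"agree_auth_api_contract"},
    "qa_integration_tests": {"backend_oidc_callback", "frontend_login_flow", "qa_edge_case_plan"},
    "lead_review": {"qa_integration_tests"},
}

def parallel_waves(graph: dict[str, set[str]]) -> list[list[str]]:
    """Group tasks into waves; each task only depends on tasks in earlier waves."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # sorted only for a stable, readable order
        waves.append(ready)
        sorter.done(*ready)
    return waves

for i, wave in enumerate(parallel_waves(sso_tasks), start=1):
    print(i, wave)
```

The first wave is the interface contract, i.e. the "negotiate before code solidifies" step; after that, backend, frontend and QA planning can all run at the same time, and the lead only reviews once integration tests pass.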

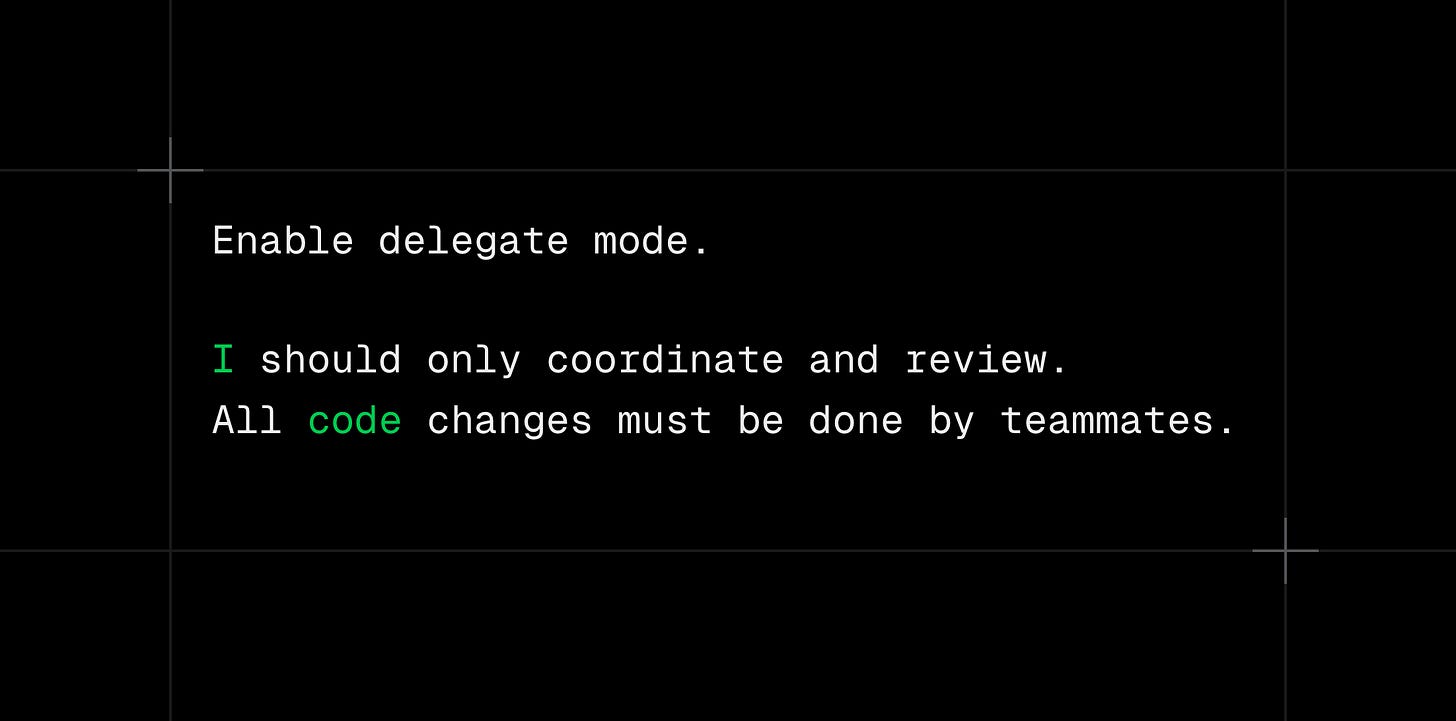

Delegate Mode: Think Like an EM

one feature that stood out strongly during research is delegate mode.

here is an example prompt:

this forces a clean separation:

you design and review

agents implement

It’s a subtle shift, but it pushes you into the right mental model:

AI as engineers, not magic.
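A toy model of that separation: in delegate mode the lead keeps only coordination actions, and implementation stays with the teammates. The action names here are made up for illustration and are not taken from Claude Code's actual tool list.

```python
# Hypothetical action sets, invented for this illustration.
COORDINATION = {"spawn_teammate", "assign_task", "send_message", "review_result"}
IMPLEMENTATION = {"edit_file", "run_tests", "run_shell"}

def allowed_actions(role: str, delegate_mode: bool) -> set[str]:
    """Which actions an agent may take under this simple policy."""
    if role == "lead":
        # Delegate mode strips implementation actions from the lead.
        return set(COORDINATION) if delegate_mode else COORDINATION | IMPLEMENTATION
    return IMPLEMENTATION | {"send_message"}  # teammates build and report back

def attempt(role: str, action: str, delegate_mode: bool = True) -> str:
    if action in allowed_actions(role, delegate_mode):
        return f"{role}: {action} ok"
    return f"{role}: {action} refused, delegate it"

print(attempt("lead", "edit_file"))     # lead: edit_file refused, delegate it
print(attempt("lead", "assign_task"))   # lead: assign_task ok
print(attempt("backend", "edit_file"))  # backend: edit_file ok
```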

When Agent Teams Make Sense

they’re great fits for:

multi-layer features

large refactors

parallel research + implementation

design debates

and they’re bad fits for:

small, isolated tasks

simple code generation

extreme cost sensitivity

🚨 Docs and community reports consistently note:

A team can cost ~3–4× the tokens of a single session 💰
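To put that multiplier into numbers: if one session spends some number of tokens on a feature, a team doing the same work lands at roughly 3 to 4 times that. The 200,000-token baseline and the price of 10 per million tokens below are placeholders I picked for the arithmetic, not measured figures or official pricing.

```python
def team_token_range(single_session_tokens: int,
                     low: float = 3.0, high: float = 4.0) -> tuple[int, int]:
    """Rough token range for a team, using the ~3-4x multiplier reported above."""
    return round(single_session_tokens * low), round(single_session_tokens * high)

def token_cost(tokens: int, price_per_million: float) -> float:
    """Cost in whatever currency the price is quoted in."""
    return tokens / 1_000_000 * price_per_million

baseline = 200_000  # placeholder: tokens a single session spends on one feature
price = 10.0        # placeholder: blended price per million tokens
low_tokens, high_tokens = team_token_range(baseline)
print(low_tokens, high_tokens)  # 600000 800000
print(token_cost(baseline, price), token_cost(low_tokens, price), token_cost(high_tokens, price))
```

Whatever your real numbers are, the ratio is what matters: the team only pays off if the parallel speed-up and fewer re-explanations are worth roughly 3 to 4 times the spend.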

some final thoughts..

this feature is brand new, so most people haven’t internalized what it enables yet.

but the direction is clear:

AI coding isn’t becoming about better prompts.

It’s becoming about better management.

if you learn to think in teams instead of prompts, you’ll be ahead of the curve. 🚀

follow me on LinkedIn @ Konstantin Borimechkov ❤️

thank you all for reading this blog post! I hope it gave you some value and food for thought the next time you build software using AI!

let’s crush this next week! 🚀
